HybridSDF: Combining Deep Implicit Shapes and Geometric Primitives for 3D Shape Representation and Manipulation

Subeesh Vasu*,1,

Nicolas Talabot*,1,

Artem Lukoianov1,2,

Pierre Baqué2,

Jonathan Donier2,

Pascal Fua1

* Equal contributions

1 CVLab, EPFL

2 Neural concept

3DV 2022

Abstract

Deep implicit surfaces excel at modeling generic shapes but do not always capture the regularities present in manufactured objects, which is something simple geometric primitives are particularly good at. In this paper, we propose a representation combining latent and explicit parameters that can be decoded into a set of deep implicit and geometric shapes that are consistent with each other. As a result, we can effectively model both complex and highly regular shapes that coexist in manufactured objects. This enables our approach to manipulate 3D shapes in an efficient and precise manner.

Hybrid Representation

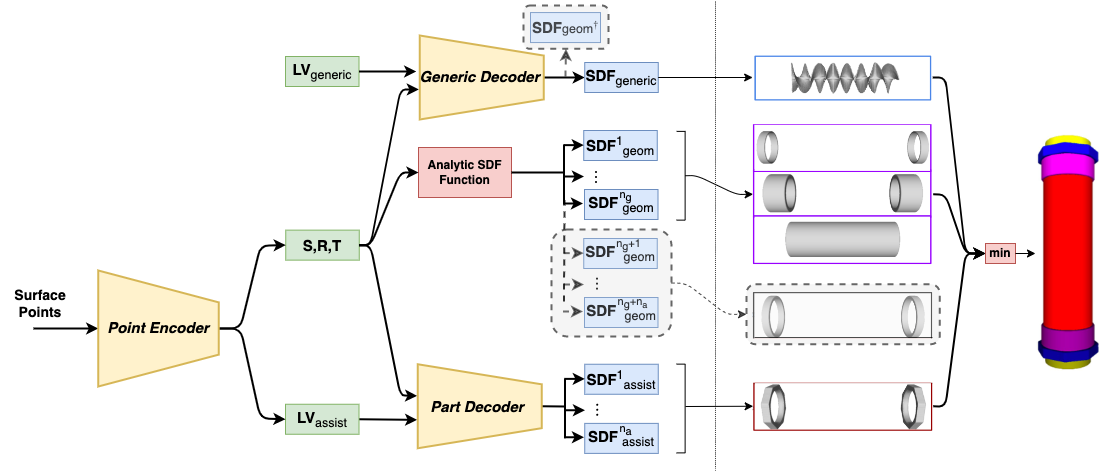

We propose a hybrid implicit representation that combines geometric primitives and deep implicit surfaces. To this end, we define three types of primitives represented by their Signed Distance Functions (SDFs):

- Generic primitives that may have arbitrarily complex shapes. Their SDFs are computed by neural networks; they are therefore purely deep implicit surfaces.

- Geometric primitives, such as spheres and cylinders. Their SDFs are computed analytically given some geometric parameters.

- Geometry-assisted primitives that resemble geometric ones but can deviate from them, such as car wheels that are almost, but not quite, cylinders. Their SDFs are computed by neural networks but are constrained to be similar to those of the corresponding geometries.
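The analytic SDFs of geometric primitives have standard closed forms. The NumPy sketch below shows two of them, a sphere and a capped cylinder aligned with the z-axis, together with one possible way to express the "constrained to be similar" requirement for geometry-assisted primitives as a mean-squared penalty. The penalty, the function names, and the synthetic "network" output are assumptions made for illustration; they are not HybridSDF's actual training loss or code.

```python
import numpy as np

def sphere_sdf(p, radius):
    # Exact signed distance to a sphere of the given radius centered at the origin:
    # negative inside, zero on the surface, positive outside.
    return np.linalg.norm(p, axis=-1) - radius

def cylinder_sdf(p, radius, half_height):
    # Exact signed distance to a capped cylinder whose axis is the z-axis.
    # d[..., 0]: signed distance to the infinite side wall; d[..., 1]: to the cap planes.
    d = np.stack([np.linalg.norm(p[..., :2], axis=-1) - radius,
                  np.abs(p[..., 2]) - half_height], axis=-1)
    outside = np.linalg.norm(np.maximum(d, 0.0), axis=-1)  # > 0 only outside
    inside = np.minimum(np.max(d, axis=-1), 0.0)           # < 0 only inside
    return outside + inside

def similarity_penalty(net_sdf, geom_sdf):
    # Hypothetical consistency term for a geometry-assisted primitive: the mean
    # squared gap between a learned SDF and the analytic SDF at the same points.
    diff = np.asarray(net_sdf) - np.asarray(geom_sdf)
    return float(np.mean(diff ** 2))

# Usage with a stand-in for a learned "almost a cylinder" SDF (not a real network).
rng = np.random.default_rng(0)
samples = rng.uniform(-1.0, 1.0, size=(256, 3))
geom = cylinder_sdf(samples, radius=0.5, half_height=0.2)
net = geom + 0.01 * np.sin(8.0 * samples[:, 0])  # small, smooth deviation
penalty = similarity_penalty(net, geom)
```

A penalty of this kind lets the learned part deviate where the data requires it (for instance, tire treads) while staying anchored to the cylinder elsewhere; a hard constraint would instead reduce the part to a purely geometric primitive.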

Network

Figure: HybridSDF architecture. A Point Encoder predicts the parameters of the parts, while multiple branches decode the SDFs of the different primitives, which are combined to reconstruct the full shape. Grey boxes denote components used only during training.

The shapes are decoded from a combination of implicit and explicit parameters: latent vectors LV and geometric parameters S, R, and T that are respectively shape parameters (such as sphere radius), rotations, and translations. Different decoding branches output the SDFs of the three primitive types, which are then combined to obtain the full shape.
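To make the roles of S, R, and T concrete, here is a minimal NumPy sketch. It assumes that each part is a canonical primitive placed by a rigid transform (rotation R, translation T) and that parts are combined by a pointwise minimum, a standard SDF union; the combination rule HybridSDF actually uses is not stated here. Only geometric branches are shown: a generic or geometry-assisted branch would replace `sdf_fn` with a network conditioned on its latent vector LV. All names (`part_sdf`, `shape_sdf`, the toy body and wheel) are hypothetical.

```python
import numpy as np

def sphere_sdf(p, radius):
    # Exact SDF of a sphere centered at the local origin.
    return np.linalg.norm(p, axis=-1) - radius

def cylinder_sdf(p, radius, half_height):
    # Exact SDF of a capped cylinder whose axis is the local z-axis.
    d = np.stack([np.linalg.norm(p[..., :2], axis=-1) - radius,
                  np.abs(p[..., 2]) - half_height], axis=-1)
    return np.linalg.norm(np.maximum(d, 0.0), axis=-1) + np.minimum(np.max(d, axis=-1), 0.0)

def rot_x(theta):
    # Rotation matrix about the world x-axis.
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def part_sdf(x, sdf_fn, S, R, T):
    # Map world points into the part's canonical frame: p = R^T (x - T).
    # With points stored as rows, this is (x - T) @ R. Rigid motions preserve
    # distances, so the canonical SDF stays exact after the transform.
    p = (x - T) @ R
    return sdf_fn(p, *S)

def shape_sdf(x, parts):
    # Assumed combination rule: union of all parts via a pointwise minimum.
    return np.min(np.stack([part_sdf(x, *part) for part in parts]), axis=0)

# Hypothetical toy object: a spherical "body" and a cylindrical "wheel"
# whose axis is turned onto the world y-axis by R.
body = (sphere_sdf, (1.0,), np.eye(3), np.zeros(3))
wheel = (cylinder_sdf, (0.5, 0.2), rot_x(np.pi / 2), np.array([3.0, 0.0, 0.0]))
x = np.array([[0.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
before = shape_sdf(x, [body, wheel])

# Parametric edit: move the wheel by changing only its translation T.
# Re-evaluating the SDF is all that is needed; nothing is optimized.
moved_wheel = wheel[:3] + (np.array([4.0, 0.0, 0.0]),)
after = shape_sdf(x, [body, moved_wheel])
```

Because the edit touches only T, re-decoding is a direct evaluation, which mirrors the optimization-free manipulation described under Parametric Manipulation. In the learned model, generic parts would additionally have to adapt to such edits, which this toy sketch does not attempt.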

Reconstruction

Our representation is interpretable in terms of parts that have a semantic meaning and enforces consistency between them at no loss of accuracy. Additionally, it yields an increase in local regularity and realism of the geometric and geometry-assisted parts.

Parametric Manipulation

Using HybridSDF, one can manipulate 3D objects by directly editing the geometric parameters S, R, and T. The disentanglement of the parameter space makes it easy to specify these parameters for several parts and have the rest of the object adapt to them. Moreover, the manipulation requires no optimization and is therefore fast and exact.

BibTeX

If you find our work useful, please cite it as:

@inproceedings{vasutalabot2022hybridsdf,
  author = {Vasu, Subeesh and Talabot, Nicolas and Lukoianov, Artem and Baqu\'e, Pierre and Donier, Jonathan and Fua, Pascal},
  title = {HybridSDF: Combining Deep Implicit Shapes and Geometric Primitives for 3D Shape Representation and Manipulation},
  booktitle = {International Conference on 3D Vision},
  year = {2022}
}